Context

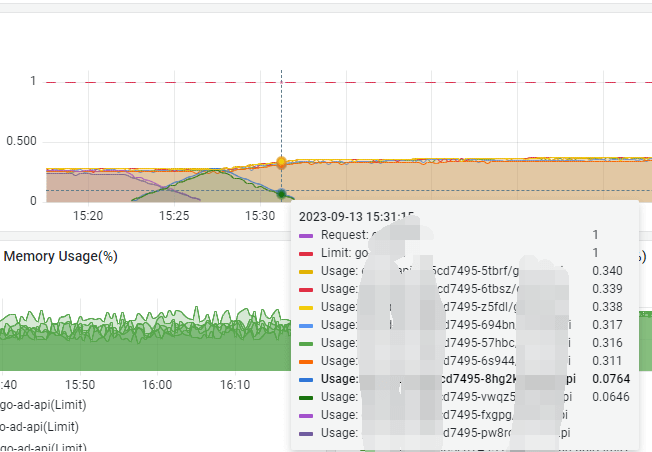

Our CTO mentioned during a code review that our services run on 0.5-core Kubernetes pods and advised adjusting runtime parameters. Here’s what happened next:

- Observation: Our Go services run on pods with 0.5 CPU cores but reported `GOMAXPROCS=8` (matching the host machine's 8-core configuration).

- Problem: This mismatch caused excessive CPU utilization due to thread contention.

- Solution: Using Uber's `automaxprocs` to align `GOMAXPROCS` with the pod's actual CPU limit resolved the issue.
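
In a real service the fix is a one-line blank import, `import _ "go.uber.org/automaxprocs"`, which adjusts `GOMAXPROCS` at startup. To show roughly what happens underneath, here is a standalone sketch. It reads a cgroup v2 `cpu.max` file, which it assumes is at `/sys/fs/cgroup/cpu.max`, and derives a value from it. The helper names `parseCPUMax` and `procsForLimit` are hypothetical, and the sketch ignores cgroup v1. Its rule of rounding down to at least 1 is our assumption about automaxprocs's default behavior, not a copy of its code.

```go
package main

import (
	"fmt"
	"math"
	"os"
	"runtime"
	"strconv"
	"strings"
)

// parseCPUMax parses a cgroup v2 cpu.max file: "<quota> <period>" in
// microseconds, or "max <period>" when no CPU limit is set.
// It returns the limit in cores and whether a limit exists.
func parseCPUMax(content string) (float64, bool, error) {
	fields := strings.Fields(content)
	if len(fields) != 2 {
		return 0, false, fmt.Errorf("unexpected cpu.max format: %q", content)
	}
	if fields[0] == "max" {
		return 0, false, nil // unlimited
	}
	quota, err := strconv.ParseFloat(fields[0], 64)
	if err != nil {
		return 0, false, err
	}
	period, err := strconv.ParseFloat(fields[1], 64)
	if err != nil || period <= 0 {
		return 0, false, fmt.Errorf("bad period in %q", content)
	}
	return quota / period, true, nil
}

// procsForLimit rounds a fractional core limit down, never below 1
// (assumed rounding rule, see lead-in).
func procsForLimit(cores float64) int {
	return int(math.Max(1, math.Floor(cores)))
}

func main() {
	data, err := os.ReadFile("/sys/fs/cgroup/cpu.max")
	if err != nil {
		fmt.Println("no cgroup v2 cpu.max; GOMAXPROCS stays", runtime.GOMAXPROCS(0))
		return
	}
	cores, limited, err := parseCPUMax(string(data))
	if err != nil || !limited {
		fmt.Println("no CPU limit; GOMAXPROCS stays", runtime.GOMAXPROCS(0))
		return
	}
	// Only lower GOMAXPROCS; never raise it above the visible CPUs.
	if n := procsForLimit(cores); n < runtime.NumCPU() {
		runtime.GOMAXPROCS(n)
	}
	fmt.Printf("CPU limit %.2f cores -> GOMAXPROCS=%d\n", cores, runtime.GOMAXPROCS(0))
}
```

For a 0.5-core pod, `cpu.max` typically reads `50000 100000`. This sketch turns that into `GOMAXPROCS=1`.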

Why Does GOMAXPROCS Matter in Containers?

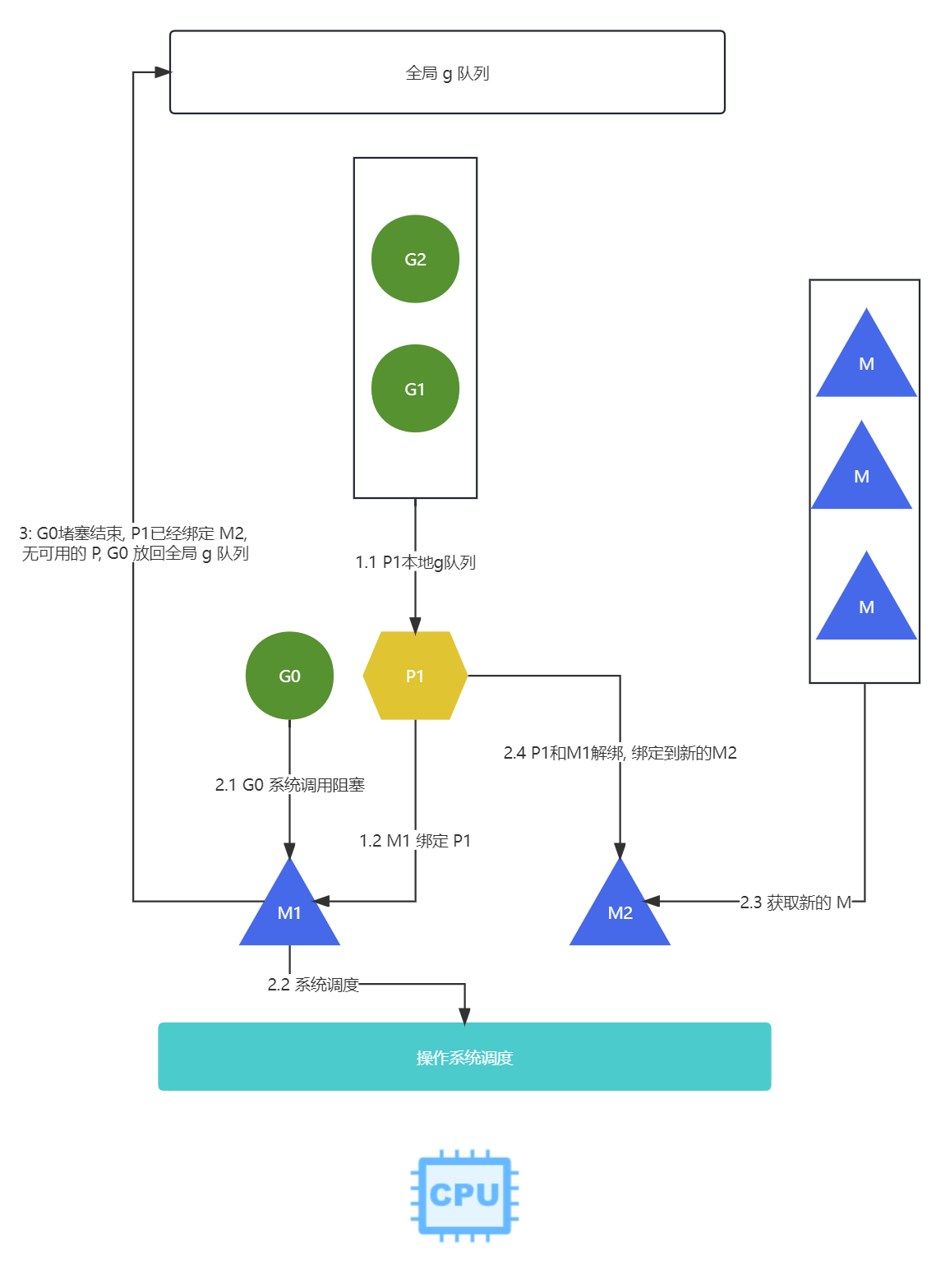

The GMP Scheduler in Go

- G (Goroutine): Lightweight thread managed by the Go runtime.

- M (Machine): OS-level thread.

- P (Processor): Logical processor. An M must hold a P to run Go code, so the number of Ps (`GOMAXPROCS`) caps how many goroutines execute in parallel.

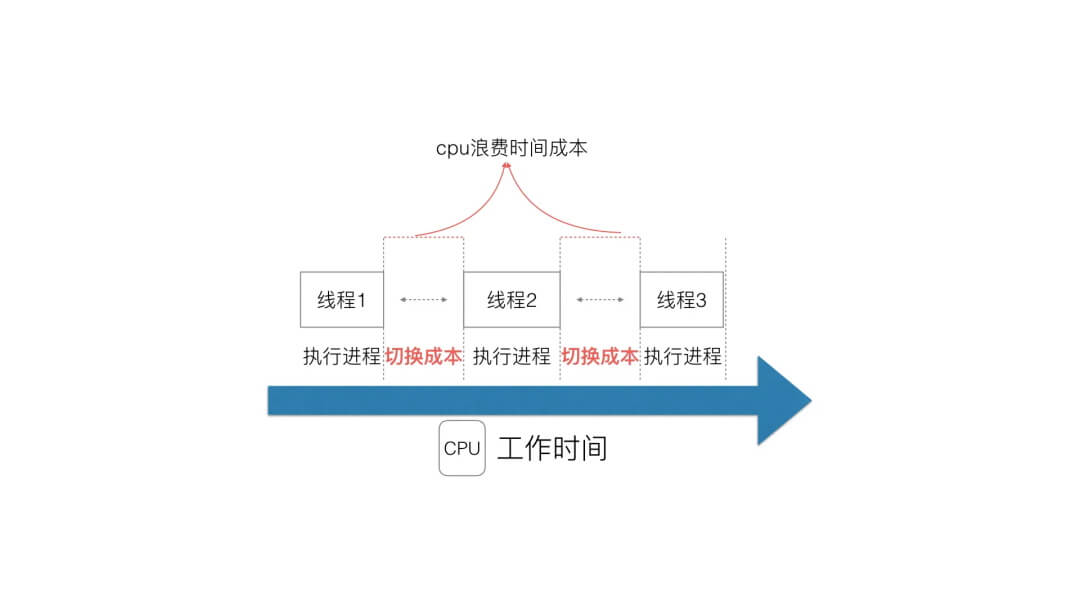

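When `GOMAXPROCS` is set higher than the CPU the container is allowed to use (e.g., 8 in a 0.5-core pod):

- Go creates 8 Ps, so up to 8 OS threads run Go code at once, all competing for half a core of CPU time.

- Frequent thread switching (context switching) wastes CPU cycles.

- The threads use up the pod's CFS quota (50 ms of CPU per 100 ms period at 0.5 cores) early in each period. After that, the container is throttled until the next period begins.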
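
To make the quota effect concrete, here is a back-of-the-envelope model of one CFS period. It assumes the Linux default 100 ms period, fully CPU-bound threads, and enough host cores for every thread to run at once. `throttleWindow` is a hypothetical helper. Real workloads add noise that this ignores, such as GC, I/O waits, and uneven load.

```go
package main

import "fmt"

// throttleWindow models one CFS period: the container may use quotaUs
// microseconds of CPU per periodUs of wall time. busyThreads CPU-bound
// threads (assumed to each have a host core) drain the quota busyThreads
// times faster. Returns milliseconds running and milliseconds throttled.
func throttleWindow(quotaUs, periodUs, busyThreads int) (runMs, throttledMs float64) {
	if busyThreads < 1 {
		return 0, 0 // nothing runnable, nothing to throttle
	}
	run := float64(quotaUs) / float64(busyThreads) // wall time until quota is gone
	if run > float64(periodUs) {
		run = float64(periodUs) // quota outlasts the period: no throttling
	}
	return run / 1000, (float64(periodUs) - run) / 1000
}

func main() {
	// A 0.5-core limit maps to 50 ms of CPU per 100 ms period.
	const quotaUs, periodUs = 50000, 100000
	for _, threads := range []int{1, 8} {
		run, throttled := throttleWindow(quotaUs, periodUs, threads)
		fmt.Printf("%d busy thread(s): run %.2f ms, then throttled %.2f ms\n", threads, run, throttled)
	}
}
```

In this model both settings get the same 50 ms of CPU per period. With 8 threads, though, the pod runs for about 6 ms and then stalls for about 94 ms, so a request that arrives during the stall waits for the next period.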

Q: If GOMAXPROCS=1, Can Go Still Handle High Concurrency?

Yes! Here’s why:

Network I/O Efficiency:

- Go uses `epoll` (Linux), `kqueue` (macOS), or IOCP (Windows) for non-blocking I/O.

- A goroutine waiting on a socket is parked by the runtime's network poller, so it holds neither a thread nor a P while it waits.

Handling Blocking System Calls:

- When a goroutine makes a blocking syscall (e.g., file I/O):

  - The associated M (OS thread) blocks in the kernel, and the runtime detaches the P from it.

  - Another M takes over the P to execute other goroutines.

  - After the syscall completes, the original goroutine tries to reacquire a P. If none is free, it rejoins the scheduler queue.
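
Here is a Unix-only sketch of the hand-off. It pins `GOMAXPROCS` to 1 and parks one goroutine in a raw `read(2)` on a pipe created with `syscall.Pipe`. This bypasses the network poller, so the OS thread really blocks in the kernel. The sketch then checks that the main goroutine keeps running and can unblock the reader. If the single P stayed stuck with the blocked thread, the write would never happen. The helper name `syscallHandoffDemo` is hypothetical.

```go
package main

import (
	"fmt"
	"runtime"
	"syscall"
	"time"
)

// syscallHandoffDemo blocks one goroutine in read(2) with GOMAXPROCS=1 and
// reports whether another goroutine still ran and woke it up.
func syscallHandoffDemo() (bool, error) {
	prev := runtime.GOMAXPROCS(1)
	defer runtime.GOMAXPROCS(prev)

	var fds [2]int // fds[0] = read end, fds[1] = write end (blocking mode)
	if err := syscall.Pipe(fds[:]); err != nil {
		return false, err
	}
	defer syscall.Close(fds[0])
	defer syscall.Close(fds[1])

	done := make(chan string, 1)
	go func() {
		buf := make([]byte, 16)
		// This M is stuck in the kernel until data arrives.
		n, err := syscall.Read(fds[0], buf)
		if err != nil {
			done <- "read error: " + err.Error()
			return
		}
		done <- string(buf[:n])
	}()

	// Let the reader enter read(2). To resume after the sleep, this
	// goroutine needs the only P, which the runtime hands to another M.
	time.Sleep(50 * time.Millisecond)
	if _, err := syscall.Write(fds[1], []byte("wake up")); err != nil {
		return false, err
	}

	select {
	case msg := <-done:
		return msg == "wake up", nil
	case <-time.After(2 * time.Second):
		return false, nil
	}
}

func main() {
	ok, err := syscallHandoffDemo()
	fmt.Println("other goroutine ran during blocking syscall:", ok, err)
}
```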

Key Takeaways

- Always set `GOMAXPROCS` to match container CPU limits (use `automaxprocs` for Kubernetes).

- Go excels at I/O-bound tasks: even with limited cores, non-blocking I/O allows high concurrency.

- Avoid overscheduling: Excess Ps in CPU-constrained environments lead to context-switching overhead.