Preface

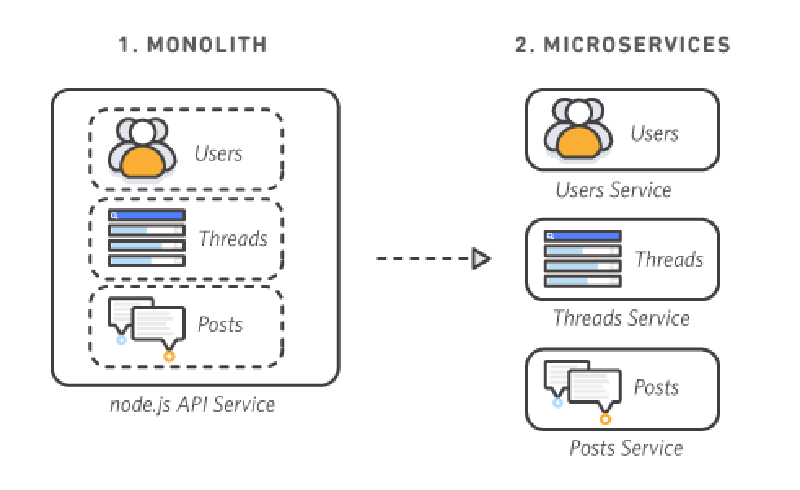

- I started working with microservices last year and deployed them on my personal server, but they all talked to each other in direct-connection mode. Today I took the opportunity to upgrade the `go-zero` codebase and revamp the architecture.

Framework

-

I continued using

go-zero(https://github.com/zeromicro/go-zero) which was originally adopted last year, just updating to the latest version. -

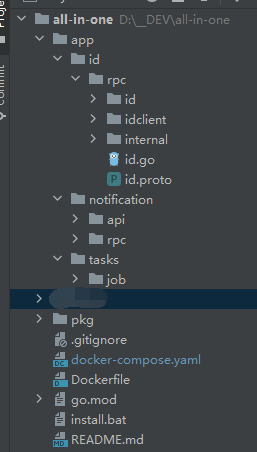

The code structure features a double-layer service under the root

appdirectory, with shared code inpkg(e.g., configuration center access) and a commonDockerfilefor all services.

1.png -

- The `Dockerfile` uses a multi-stage build. The build argument `SERVICE_PATH` selects the target service: `docker build -t xxx:xx --build-arg SERVICE_PATH=${SERVICE_PATH} .`

```dockerfile
# Build stage: compile the service selected by SERVICE_PATH.
FROM golang:alpine3.18 AS builder

LABEL stage=gobuilder

ARG SERVICE_PATH

ENV CGO_ENABLED=0

# Switch Alpine to the Aliyun mirror and install timezone data.
RUN echo -e 'https://mirrors.aliyun.com/alpine/v3.18/main/\nhttps://mirrors.aliyun.com/alpine/v3.18/community/' > /etc/apk/repositories && apk update --no-cache && apk add --no-cache tzdata

WORKDIR /build

COPY . .

RUN go env -w GO111MODULE=on
RUN go env -w GOPROXY=https://mirrors.aliyun.com/goproxy/,direct
RUN go mod download
RUN go build -ldflags="-s -w" -o /build/main /build/app/${SERVICE_PATH}

# Runtime stage: ship only the static binary, CA certificates, and timezone data.
FROM scratch

ARG SERVICE_PATH

COPY --from=builder /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/ca-certificates.crt
COPY --from=builder /usr/share/zoneinfo/Asia/Shanghai /usr/share/zoneinfo/Asia/Shanghai

ENV TZ=Asia/Shanghai

WORKDIR /app

COPY --from=builder /build/main /app/main

ENTRYPOINT ["./main"]
```

- To run multiple instances on a single machine without port conflicts, each instance is assigned an available port dynamically during `go-zero` startup:

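```go
import "net"

// GetAvailablePort asks the OS for a free TCP port by binding to port 0,
// then releases the port so the service can listen on it.
func GetAvailablePort() (int, error) {
	address, err := net.ResolveTCPAddr("tcp", "0.0.0.0:0")
	if err != nil {
		return 0, err
	}

	listener, err := net.ListenTCP("tcp", address)
	if err != nil {
		return 0, err
	}
	defer listener.Close()

	return listener.Addr().(*net.TCPAddr).Port, nil
}
```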
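One caveat with this helper: the port is released before the service binds it, so another process could take it in the meantime. Below is a minimal sketch of one way to cope with that, retrying with a fresh port when binding fails. `listenOnFreePort` and the attempt count are hypothetical and not part of go-zero. A real service would hand the resulting address to its own server configuration instead of calling `net.Listen` directly.

```go
package main

import (
	"fmt"
	"net"
)

// GetAvailablePort asks the kernel for a free TCP port by binding to port 0.
func GetAvailablePort() (int, error) {
	listener, err := net.Listen("tcp", "0.0.0.0:0")
	if err != nil {
		return 0, err
	}
	defer listener.Close()
	return listener.Addr().(*net.TCPAddr).Port, nil
}

// listenOnFreePort (hypothetical helper) picks a free port and binds it,
// trying again with a new port if the bind fails.
func listenOnFreePort(attempts int) (net.Listener, string, error) {
	if attempts < 1 {
		attempts = 1
	}
	var lastErr error
	for i := 0; i < attempts; i++ {
		port, err := GetAvailablePort()
		if err != nil {
			lastErr = err
			continue
		}
		addr := fmt.Sprintf("0.0.0.0:%d", port)
		ln, err := net.Listen("tcp", addr)
		if err != nil {
			lastErr = err // the port was taken in between; try another one
			continue
		}
		return ln, addr, nil
	}
	return nil, "", fmt.Errorf("no free port after %d attempts: %w", attempts, lastErr)
}

func main() {
	ln, addr, err := listenOnFreePort(3)
	if err != nil {
		panic(err)
	}
	defer ln.Close()
	fmt.Println("listening on", addr)
}
```

The retry does not remove the race entirely, but it turns a rare startup failure into a second attempt on a different port.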

Service Registration & Discovery and Configuration Center

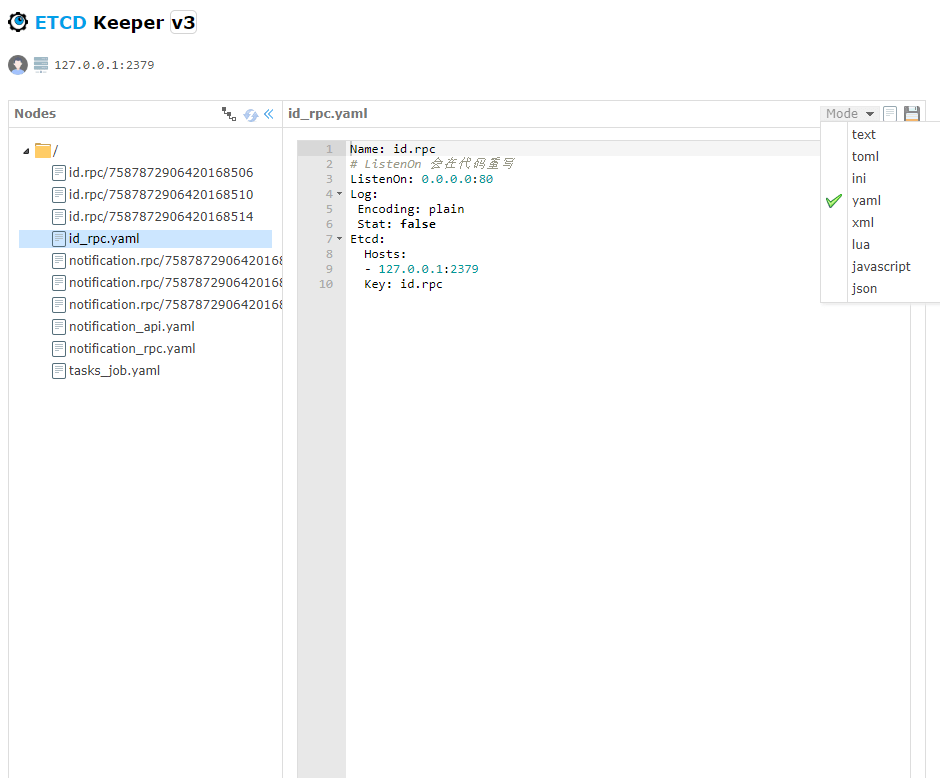

- Key requirements for personal deployment: low cost and reliability.

- Service registration/discovery uses etcd.

- The configuration center also uses `etcd` (configs are pulled at startup) and is managed through etcdkeeper, which has built-in JSON/YAML support.

`docker-compose` file for the infrastructure setup:

```yaml
version: '3'

services:
  etcd:
    container_name: etcd
    image: bitnami/etcd:3
    restart: always
    environment:
      - ETCDCTL_API=3
      - ALLOW_NONE_AUTHENTICATION=yes
      - ETCD_ADVERTISE_CLIENT_URLS=http://127.0.0.1:2379
    volumes:
      - "./data/etcd:/bitnami/etcd/data"
    network_mode: host

  etcdkeeper:
    hostname: etcdkeeper
    image: evildecay/etcdkeeper:v0.7.6
    network_mode: host
    restart: always
```

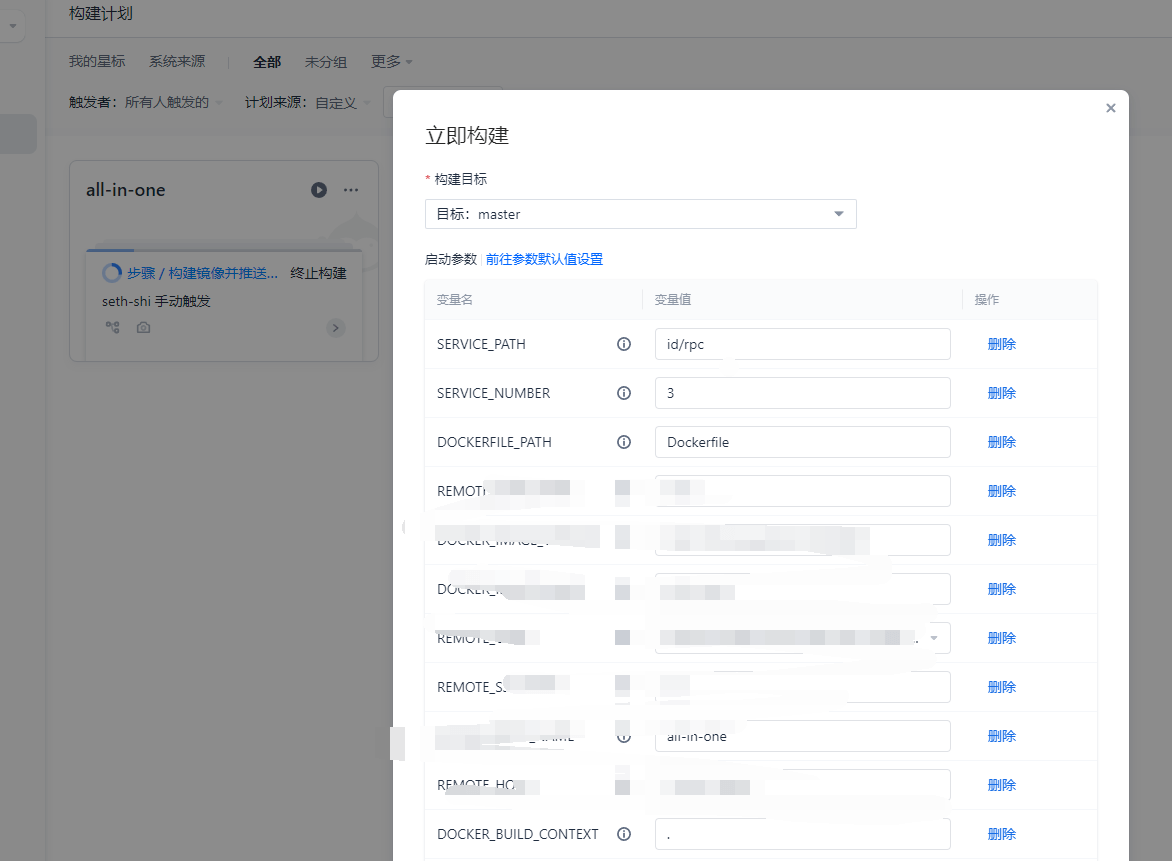
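Pulling configs at startup can be sketched roughly as follows. This is an illustration under assumptions, not the actual code in `pkg`: the `KVStore` interface, the `ServiceConfig` fields, and the `/config/user-rpc` key are all hypothetical. An in-memory map stands in for etcd so the example runs on its own; a real implementation would back `KVStore` with an etcd client.

```go
package main

import (
	"context"
	"encoding/json"
	"errors"
	"fmt"
	"time"
)

// ServiceConfig is a hypothetical config shape; real fields depend on the service.
type ServiceConfig struct {
	Name     string `json:"name"`
	LogLevel string `json:"logLevel"`
}

// KVStore is an assumed abstraction over the configuration center.
// In production it would be backed by an etcd client.
type KVStore interface {
	Get(ctx context.Context, key string) ([]byte, error)
}

// memoryStore is an in-memory stand-in for etcd, for demonstration only.
type memoryStore map[string][]byte

func (m memoryStore) Get(_ context.Context, key string) ([]byte, error) {
	v, ok := m[key]
	if !ok {
		return nil, errors.New("key not found: " + key)
	}
	return v, nil
}

// LoadConfig fetches the JSON document stored under key once, at startup,
// and decodes it into a ServiceConfig.
func LoadConfig(store KVStore, key string) (ServiceConfig, error) {
	ctx, cancel := context.WithTimeout(context.Background(), 3*time.Second)
	defer cancel()

	var cfg ServiceConfig
	raw, err := store.Get(ctx, key)
	if err != nil {
		return cfg, fmt.Errorf("fetch %q: %w", key, err)
	}
	if err := json.Unmarshal(raw, &cfg); err != nil {
		return cfg, fmt.Errorf("decode %q: %w", key, err)
	}
	return cfg, nil
}

func main() {
	store := memoryStore{
		"/config/user-rpc": []byte(`{"name":"user-rpc","logLevel":"info"}`),
	}
	cfg, err := LoadConfig(store, "/config/user-rpc")
	if err != nil {
		panic(err) // fail fast: a service without config should not start
	}
	fmt.Printf("%+v\n", cfg)
}
```

Failing fast on a missing or malformed key keeps a misconfigured instance from registering itself for discovery.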

CI

- CI/CD runs on Coding, which includes 10 free build hours per month.

- The environment variables `SERVICE_PATH` (build target) and `SERVICE_NUMBER` (instance count) are injected during builds.

- Deployment pseudo-code:

```groovy
script {
    // SERVICE_NUMBER arrives as a string, so convert it before looping.
    def count = SERVICE_NUMBER.toInteger()
    echo "Deploying: ${count} instances"

    // Start one container per instance, named <service>-<index>.
    for (int i = 0; i < count; i++) {
        sshCommand(
            remote: remoteConfig,
            command: "docker run --cpus=0.5 --memory=100m -d --net=host --restart=always --name ${SERVICE_NAME}-${i} ${IMAGE_NAME}:${CI_BUILD_NUMBER}",
            sudo: true
        )
        echo "${SERVICE_NAME}-${i}..."
    }
}
```
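To make the naming scheme in the loop concrete, here is a small Go sketch that builds the same `docker run` commands for a given instance count. `BuildRunCommands` and the sample service and image names are hypothetical; the flags are copied from the pipeline script. The containers share the host network, so the instances coexist only because each one picks its own free port at startup.

```go
package main

import "fmt"

// BuildRunCommands returns one `docker run` command per instance.
// Container names follow the <service>-<index> scheme used by the pipeline.
func BuildRunCommands(service, image, tag string, count int) []string {
	var cmds []string
	for i := 0; i < count; i++ {
		cmds = append(cmds, fmt.Sprintf(
			"docker run --cpus=0.5 --memory=100m -d --net=host --restart=always --name %s-%d %s:%s",
			service, i, image, tag,
		))
	}
	return cmds
}

func main() {
	// Hypothetical service, image, and build number.
	for _, cmd := range BuildRunCommands("user-rpc", "registry.example.com/user-rpc", "42", 2) {
		fmt.Println(cmd)
	}
}
```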

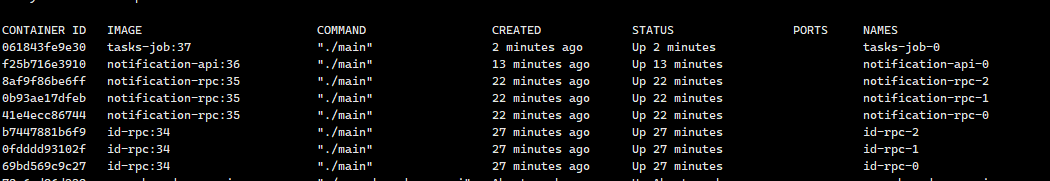

End

- Final deployment shows multiple service instances running: